I heard someone asking today for support for Revocation of JWT, and I thought about it a little, and decided I don’t see the point.

Specifically, I don’t see the point of the process described in this post regarding “Blacklisting JWT in express-jwt”. I believe that it’s possible to blacklist JWT. I just don’t see the point.

Let’s take a step back and look at OAuth

For those unaware, JWT refers to JSON Web Token, which is a type of token that can be used in APIs. The format of JWT is self-describing.

Here’s the key problem tokens address: how does a server decide whether to honor or reject a request? It’s a matter of authorization. OAuth 2.0 is the framework the industry has adopted for enabling authorization in API-oriented apps. Basically it says, “give apps tokens, then grant access based on the token.”

Often the way things work under the OAuth framework is:

- an app running on a mobile phone connects to a token dispensary (a server) to request a token

- the server requires the client (==app) to provide some credentials before generating and dispensing a token. Sometimes the server also requires user authentication before delivering a token. (This is done in the Authorization Code grant or the password grant.)

- the client app then sends this token to a different server to ask for services.

- the API server evaluates the token before granting service. Often this requires contacting the original token dispensary to see if the token is good, and to see if the token should be honored for the particular service being requested.

You can see there are three parties in the game: the app, the token dispensary, and the API server.

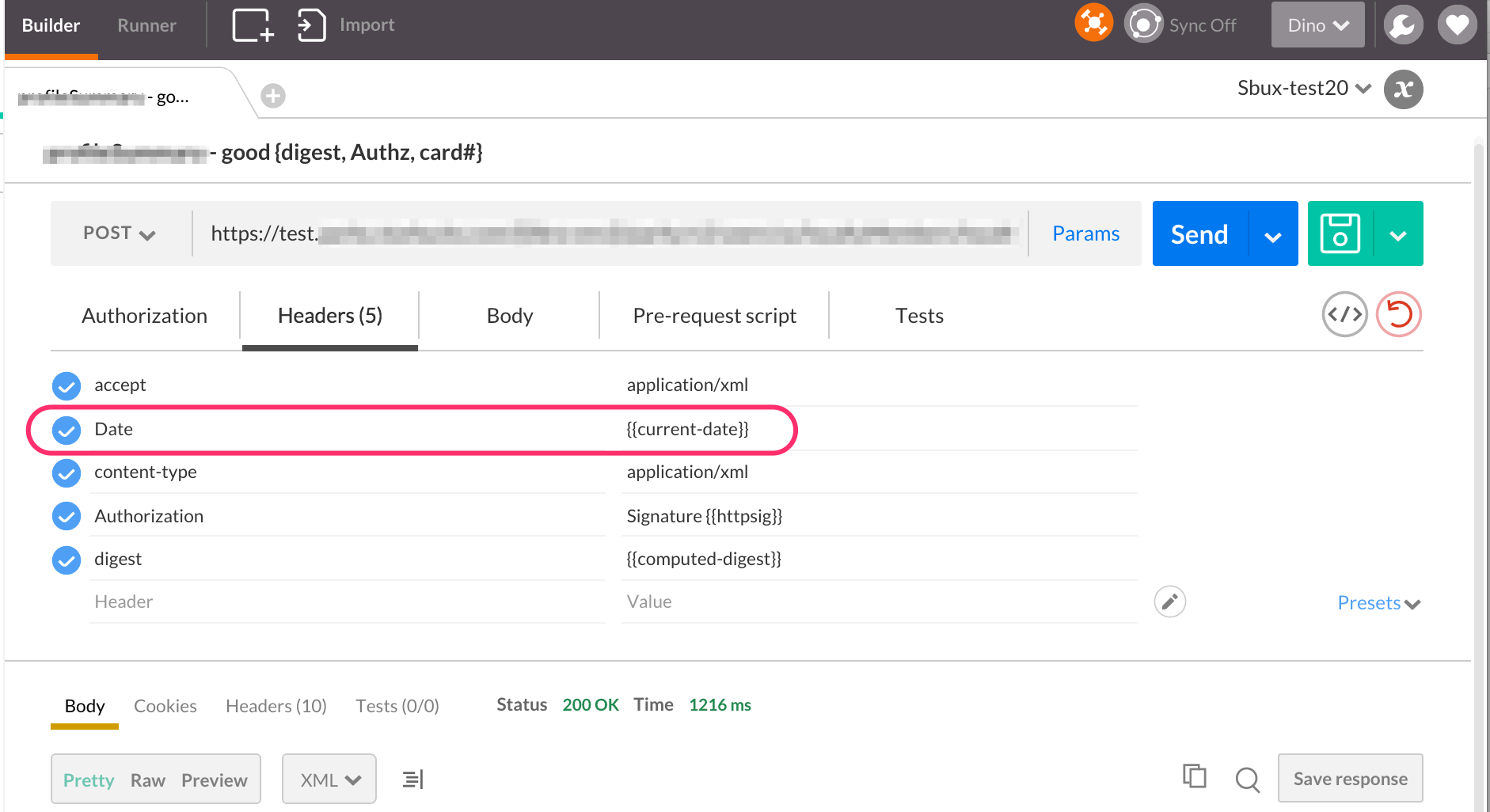

One handy optimization is to put the API endpoint behind an OAuth-aware proxy server, like Apigee Edge. (Disclaimer: I work for Apigee). The app then contacts Edge for a token (via POST /token). If the credentials are good, Edge generates and stores an opaque token, which looks like n06ztxcf2bRpN42cDwVUNvroGOO6tMdt, and delivers it back to the app. The app then requests service (via GET /service, or whatever), passing the previously obtained token. Edge sees this request, extracts the token within it, evaluates whether the token is good, and either passes the request through to the API endpoint or rejects it based on the token status.

The key thing: these tokens are opaque. The app doesn’t know what that token is, beyond a string of characters. The app cannot tell what the token is good for, unless it asks the token dispensary, which is the final arbiter. Sometimes when dispensing the token, the token dispensary also delivers metadata about the token, like: expiry, scopes, and other attributes. But that is not required, and not always done. So, bearer tokens are often opaque, and they are opaque by default in Apigee Edge.
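To make the opaque model concrete, here is a minimal sketch in Python. The `TokenDispensary` class, the `api_server_allows` function, and the in-memory dictionary are all hypothetical names I made up for illustration; this is not how Apigee Edge is implemented. The point is only that the token string carries no meaning, so the API server has to ask the issuer.

```python
import secrets
import time


class TokenDispensary:
    """Hypothetical in-memory issuer; a real one would use a persistent store."""

    def __init__(self):
        self._tokens = {}  # token string -> metadata known only to the issuer

    def issue(self, client_id, scope, ttl_seconds=1800):
        token = secrets.token_urlsafe(24)  # random string, no embedded meaning
        self._tokens[token] = {
            "client_id": client_id,
            "scope": scope,
            "expires_at": time.time() + ttl_seconds,
        }
        return token

    def lookup(self, token):
        """Return the token's metadata, or None if unknown or expired."""
        meta = self._tokens.get(token)
        if meta is None or meta["expires_at"] <= time.time():
            return None
        return meta

    def revoke(self, token):
        # Revocation is trivial here: forget the token.
        self._tokens.pop(token, None)


def api_server_allows(dispensary, token, required_scope):
    """The API server cannot interpret the token, so it asks the issuer."""
    meta = dispensary.lookup(token)
    return meta is not None and required_scope in meta["scope"]


dispensary = TokenDispensary()
token = dispensary.issue("mobile-app", scope={"read"})
print(api_server_allows(dispensary, token, "read"))  # True
dispensary.revoke(token)
print(api_server_allows(dispensary, token, "read"))  # False
```

Notice that revocation comes for free in this model, because every request already goes through the issuer's lookup.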

And by “Bearer”, we mean… an app that possesses a token is presumed to “own” the token, and should be granted service based on that token alone. In other words, the token is a secret. It’s like cash money – if you lose it, someone else can spend it. But not exactly like cash. An opaque token is more like a promissory note or an IOU; to determine if it’s worth anything you need to go back to the issuing party, to ask “are you willing to pay up on this note?”

How is JWT different?

JWT is a different kind of OAuth token. OAuth is just a framework, and does not stipulate exactly the kind of token that needs to be generated and delivered. One type of token is the opaque bearer kind. JWT is an alternative format. Rather than being an opaque string, JWT is a self-describing format for bearer tokens. Generally, a JWT includes an encoded payload that can be decoded and read by anyone, and that payload contains a bunch of claims. The standard set of claims includes: when the token was generated (“issued at”), who generated it (the “issuer”), the intended audience, the expiry, and other things. JWT can include custom claims, such as “the user is a good person”. But more often the custom claim is: “this user is authorized to invoke /serviceA at endpoint http://example.com”, although this kind of claim is shortened quite a bit and is encoded in JSON, rather than in English.
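Here is a small sketch of what “can be decoded and read by anyone” means in practice. It uses only the Python standard library. The claim values, the issuer URL, and the `scope` claim are made up for illustration, and the token is an unsigned one (`"alg": "none"`) just to keep the example short. Reading claims this way does not verify anything.

```python
import base64
import json


def b64url_encode(data: bytes) -> str:
    # JWT uses URL-safe base64 with the "=" padding stripped
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    # Restore the padding that JWT strips before decoding
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def read_claims(token: str) -> dict:
    """Decode the payload segment. This does NOT check any signature."""
    header_b64, payload_b64, signature_b64 = token.split(".")
    return json.loads(b64url_decode(payload_b64))


# Hypothetical claims: iss, aud, iat and exp are registered claim names,
# while "scope" is a custom claim invented for this example.
claims = {
    "iss": "https://issuer.example.com",
    "aud": "serviceA",
    "iat": 1700000000,
    "exp": 1700001800,
    "scope": "serviceA:invoke",
}
header = {"alg": "none"}

# An unsigned JWT is header.payload followed by an empty signature segment.
token = ".".join(
    [b64url_encode(json.dumps(part).encode("utf-8")) for part in (header, claims)]
    + [""]
)

print(token)
print(read_claims(token)["scope"])  # serviceA:invoke
```

No key and no call to the issuer is needed to read the claims, which is exactly why a JWT should not carry anything secret in its payload unless it is encrypted.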

Optionally accompanying that payload with its claims is a signature, which can be verified by any party possessing the public key that corresponds to the private key used to sign it (or, when a symmetric algorithm is used, the shared secret key). This is what is meant by “self describing”. The self-describing nature of JWT is the opposite of opaque. [JWT can be unsigned, can be signed, or can be encrypted. The encryption part is an optional part of the spec.]

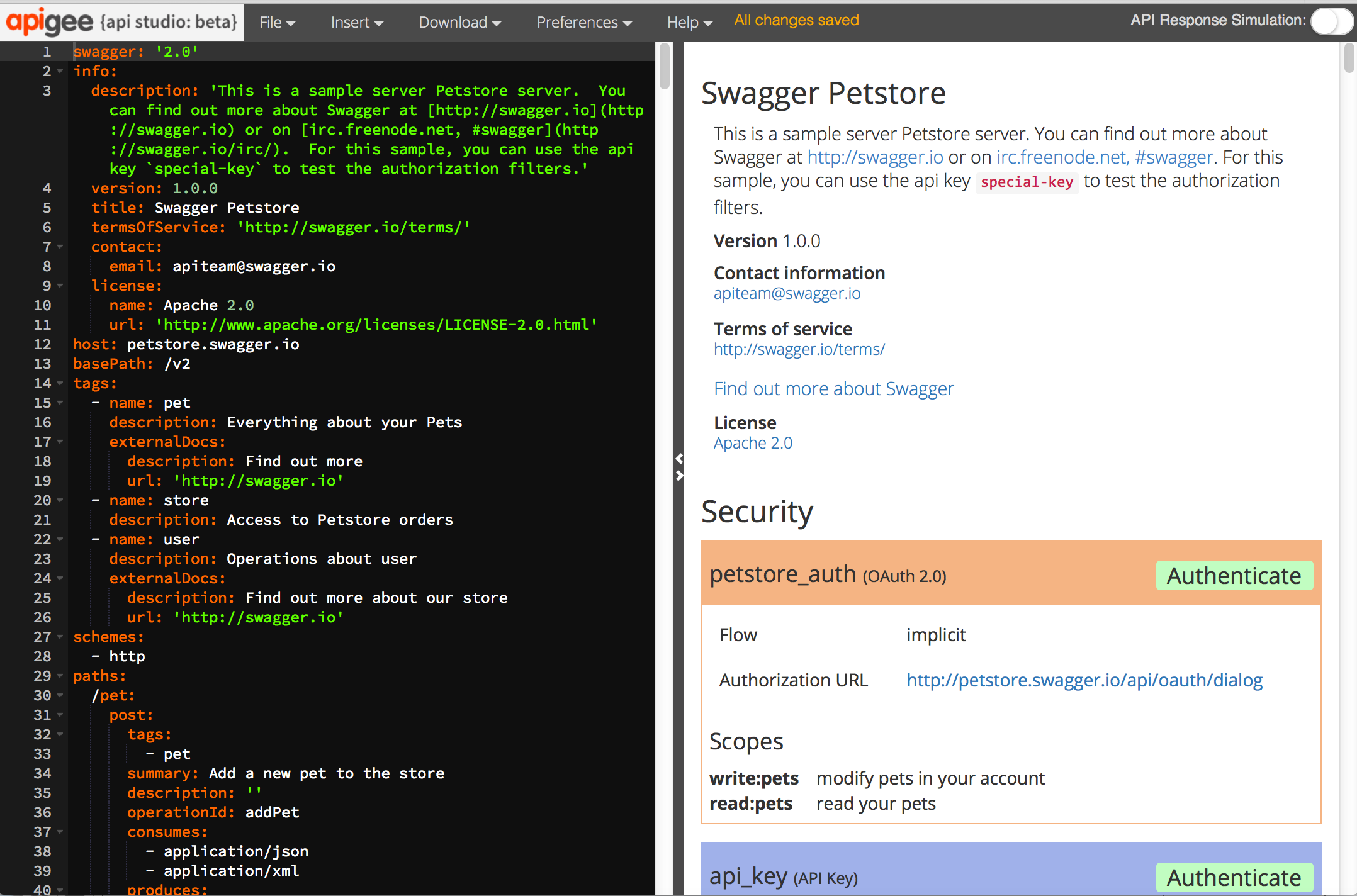

(Commercial message: I said above that Apigee Edge generates opaque bearer tokens by default. You can also configure Apigee Edge to generate signed JWT.)

Why Self-describing Tokens?

The main benefit of a model that uses self-describing tokens is that the API endpoint need not contact the token dispensary in order to determine if the token is good, not-expired, and if a request bearing such a token ought to be honored. In other words, JWT supports federation. One party issues the token, another party can verify it, without contacting the issuer. Remember, JWT is a bearer model, which means the possessor of the token is presumed to be authorized to get service based on what’s in the token. This is truly like cash money this time, because … when honoring a JWT, the API endpoint need not contact the issuer, just as when accepting a $20 bill, you don’t have to contact the US Treasury to see if the bill is worth $20.
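As a rough sketch of that local check, the code below signs a token with HMAC-SHA256 (the HS256 algorithm) and then verifies it using nothing but the key, the expected audience, and the clock. I chose HS256 only because it works with the Python standard library; with an asymmetric algorithm such as RS256 the API endpoint would hold just the issuer's public key. The function names, the key, and the claim values are assumptions for illustration, and a production system should use a maintained JWT library rather than this.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(claims: dict, key: bytes) -> str:
    """Runs at the issuer: encode header and claims, then sign them."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + b64url_encode(signature)


def verify_locally(token: str, key: bytes, audience: str, now=None):
    """Runs at the API endpoint. Returns the claims, or None. No network call."""
    now = time.time() if now is None else now
    try:
        signing_input, signature_b64 = token.rsplit(".", 1)
        header_b64, payload_b64 = signing_input.split(".")
        header = json.loads(b64url_decode(header_b64))
        claims = json.loads(b64url_decode(payload_b64))
        signature = b64url_decode(signature_b64)
    except ValueError:
        return None  # malformed token
    if not isinstance(header, dict) or not isinstance(claims, dict):
        return None
    if header.get("alg") != "HS256":  # accept only the algorithm we expect
        return None
    expected = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):
        return None  # forged or tampered
    if claims.get("aud") != audience or claims.get("exp", 0) <= now:
        return None  # wrong audience or expired
    return claims


key = b"demo-shared-secret"  # hypothetical key shared by issuer and API endpoint
issued_at = 1700000000
token = issue_token(
    {"iss": "token-dispensary", "aud": "serviceA", "iat": issued_at,
     "exp": issued_at + 1800},
    key,
)

print(verify_locally(token, key, "serviceA", now=issued_at + 60) is not None)    # True
print(verify_locally(token, key, "serviceA", now=issued_at + 3600) is not None)  # False
```

Everything `verify_locally` needs is already in its arguments. That is the whole federation benefit: the issuer is not involved after the token is dispensed.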

So how ’bout Revocation of JWT?

This is a long story and I’m finally getting to the point: If you want JWT with powers to revoke the token, then you abandon the federation benefit.

Making the JWT self-describing means no honoring party needs to contact the issuer. Just verify the signature (verify the $20 bill is real), and then grant service. If you add in revocation as a requirement, then the honoring party needs to contact the issuer: “I have a $20 bill with serial number T128-DCQ-2872JKDJ; should I honor it?”

It means a synchronous call across the two parties. Which means federation is effectively broken. You abandon the federation benefit.
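A toy sketch of the difference, in Python. The `Issuer` class and its call counter are hypothetical; the counter stands in for a synchronous remote call. The `jti` claim is the standard JWT ID claim, which is the natural “serial number” to look up. The claims are assumed to have already passed signature and expiry checks locally.

```python
class Issuer:
    """Hypothetical issuer that keeps a list of revoked token IDs."""

    def __init__(self):
        self.revoked_ids = set()
        self.calls = 0  # counts round trips made by honoring parties

    def revoke(self, token_id):
        self.revoked_ids.add(token_id)

    def is_revoked(self, token_id):
        self.calls += 1  # stands in for a synchronous remote call
        return token_id in self.revoked_ids


def honor_without_revocation(claims):
    # Signature and expiry were already checked locally; nothing left to ask.
    return True


def honor_with_revocation(claims, issuer):
    # Every single request now has to consult the issuer.
    return not issuer.is_revoked(claims["jti"])


issuer = Issuer()
claims = {"jti": "T128-DCQ-2872JKDJ", "scope": "read"}  # assumed already verified

print(honor_without_revocation(claims))        # True
print(issuer.calls)                            # 0
print(honor_with_revocation(claims, issuer))   # True
issuer.revoke("T128-DCQ-2872JKDJ")
print(honor_with_revocation(claims, issuer))   # False
print(issuer.calls)                            # 2
```

One issuer lookup per request is the same cost profile as the opaque token, with the signature work stacked on top.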

The corollary to the above is that you also still incur all the overhead of the JWT handling – the signing and verification. So you get all the costs of JWT and none of the benefits.

If revocation of bearer tokens is important to you, you could do the same thing with an opaque bearer token and eliminate all the fussy signature and validation stuff.

When you’re using an API Proxy server like Apigee Edge for both issuing and verifying+validating tokens, then there is no expensive additional remote call to check the revocation status. But you still lack the federation benefit, and you still incur this signing and verification nonsense.

I think when people ask for the ability to handle JWT with revocation, they don’t really understand what they’re asking.