I previously wrote about the black hole of submitting job applications electronically.

The Wall Street Journal explains how that phenomenon occurs.

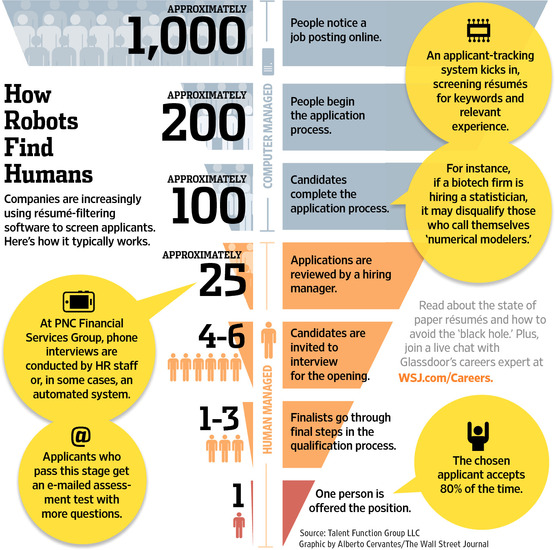

> To cut through the clutter, many large and midsize companies have turned to [automatic] applicant-tracking systems to search résumés for the right skills and experience. The systems, which can cost from $5,000 to millions of dollars, are efficient, but not foolproof.

Efficient, but not foolproof? Is that a way of saying “Fast, but wrong”?

If a system very efficiently does the wrong thing, it doesn’t seem like a very good system.

The WSJ goes on to say:

> At many large companies the tracking systems screen out about half of all résumés, says John Sullivan, a management professor at San Francisco State University.

All well and good. I understand that even evaluating people for a job is expensive, and if a company gets thousands of résumés, it needs some way to cut through the noise.

On the other hand, it sure seems like a bunch of good candidates are being completely ignored. There are a lot of false negatives.

It sure seems to me that the system, as it stands, isn’t working. Companies can rely on recruiters, but that can be very expensive. They can rely on online job boards, but that’s a frustrating recipe for lots of noise.

Thankfully, though, not all companies rely solely on online job submission forms to source candidates.