A provocative essay came up on Hacker News today, entitled “RESTful considered harmful.”

The summary of the essay:

- JSON is bloated in comparison to protobufs and similar binary protocols

- There are no interface contracts or data schema

- HATEOAS doesn’t work

- No direct support for batching, paging, sorting, etc. (e.g., no SQL semantics)

- CRUD is too limited

- No, really, CRUD is too limited

- HTTP Status codes don’t naturally map to business semantics

- There’s no queueing or asynchrony

- There are no standards

- Backward compatibility is hard

Let’s have a look at the validity of these concerns.

1. JSON is bloated in comparison to protobufs

The essay cites “one tremendous advantage of JSON”: human readability, and then completely discounts this advantage by saying that it’s bloated. It really is a tremendous advantage, which is why XML won over MQ’s binary protocol and the XDR from Sun RPC, and the NDR from DCE RPC, and every other frigging binary protocol. And readability is why JSON displaced XML.

Ask yourself this: what is the value of readability versus the performance advantages of the alternatives, like Thrift or protobufs? Is readability worth 1x as much as the improved efficiency you might get with protobufs? 2x? I believe that for many people, it’s worth 100x. It trumps all other considerations. For uber-experts, it’s deceptively attractive to wave away the advantage of human-readability. For the rest of the world, for 97% of developers, it’s a huge, Huge, HUGE advantage. For high speed financial trades, JSON is wrong. For Google’s internal interfaces, wrong. For most of the world, RIGHT.

AND as the essay notes, REST doesn’t prescribe JSON. Or XML. Or anything. There’s a content-type header, and clients and servers can negotiate it. If the client says Accept: application/x-protobuf, and the server can send it, bliss for you. So this point – “JSON is bloated” – is not only not valid (false) in the first place, it’s also not an argument against REST.
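Here is a rough sketch of what that negotiation looks like on the server side. Everything in it is an assumption made for illustration: the `ENCODERS` table, the function names, and especially `encode_binary_stub`, which is only a stand-in for a real protobuf serializer and does not produce protobuf output.

```python
import json

def encode_json(record):
    return json.dumps(record).encode("utf-8")

def encode_binary_stub(record):
    # Stand-in for a binary encoder such as protobuf; NOT a real protobuf encoding.
    return b"\x00" + repr(sorted(record.items())).encode("utf-8")

# Hypothetical table of the representations this server can produce.
ENCODERS = {
    "application/json": encode_json,
    "application/x-protobuf": encode_binary_stub,
}

def parse_accept(header):
    """Return media types from an Accept header, highest q-value first."""
    choices = []
    for position, part in enumerate(header.split(",")):
        fields = [f.strip() for f in part.split(";")]
        media_type = fields[0].lower()
        if not media_type:
            continue
        q = 1.0
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        choices.append((-q, position, media_type))
    # q=0 means "not acceptable", so those entries are dropped.
    return [m for neg_q, _, m in sorted(choices) if neg_q < 0]

def negotiate(accept_header, default="application/json"):
    """Pick the client's most preferred media type that the server supports."""
    if not accept_header.strip():
        return default
    for media_type in parse_accept(accept_header):
        if media_type in ENCODERS:
            return media_type
        if media_type == "*/*":
            return default
    return None

def respond(accept_header, record):
    """Return (status, headers, body) for a record, honoring the Accept header."""
    media_type = negotiate(accept_header)
    if media_type is None:
        return 406, {}, b""  # 406 Not Acceptable
    return 200, {"Content-Type": media_type}, ENCODERS[media_type](record)
```

The resource and the URL stay the same; only the representation changes. A client that wants the compact format asks for it, and everyone else keeps reading JSON.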

2. There are no interface contracts or data schema

This is a feature. OMG, have we not tried this enough times? Did this guy skip his “History of IDL compilers” course in the Computer History department at school? Sun RPC IDL. DCE RPC IDL. CORBA IDL. WSDL, ferpeetsake! XML Schema!!

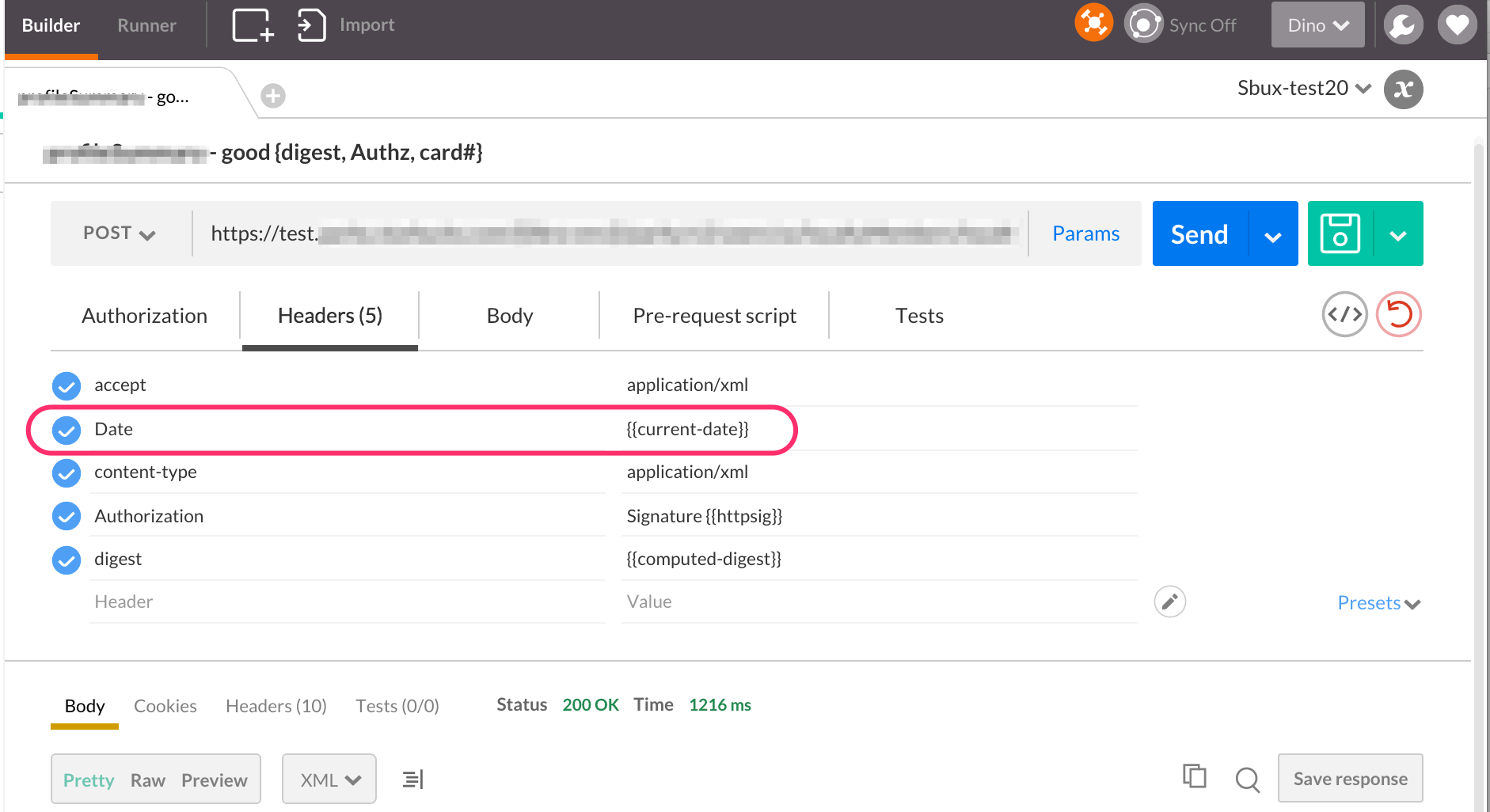

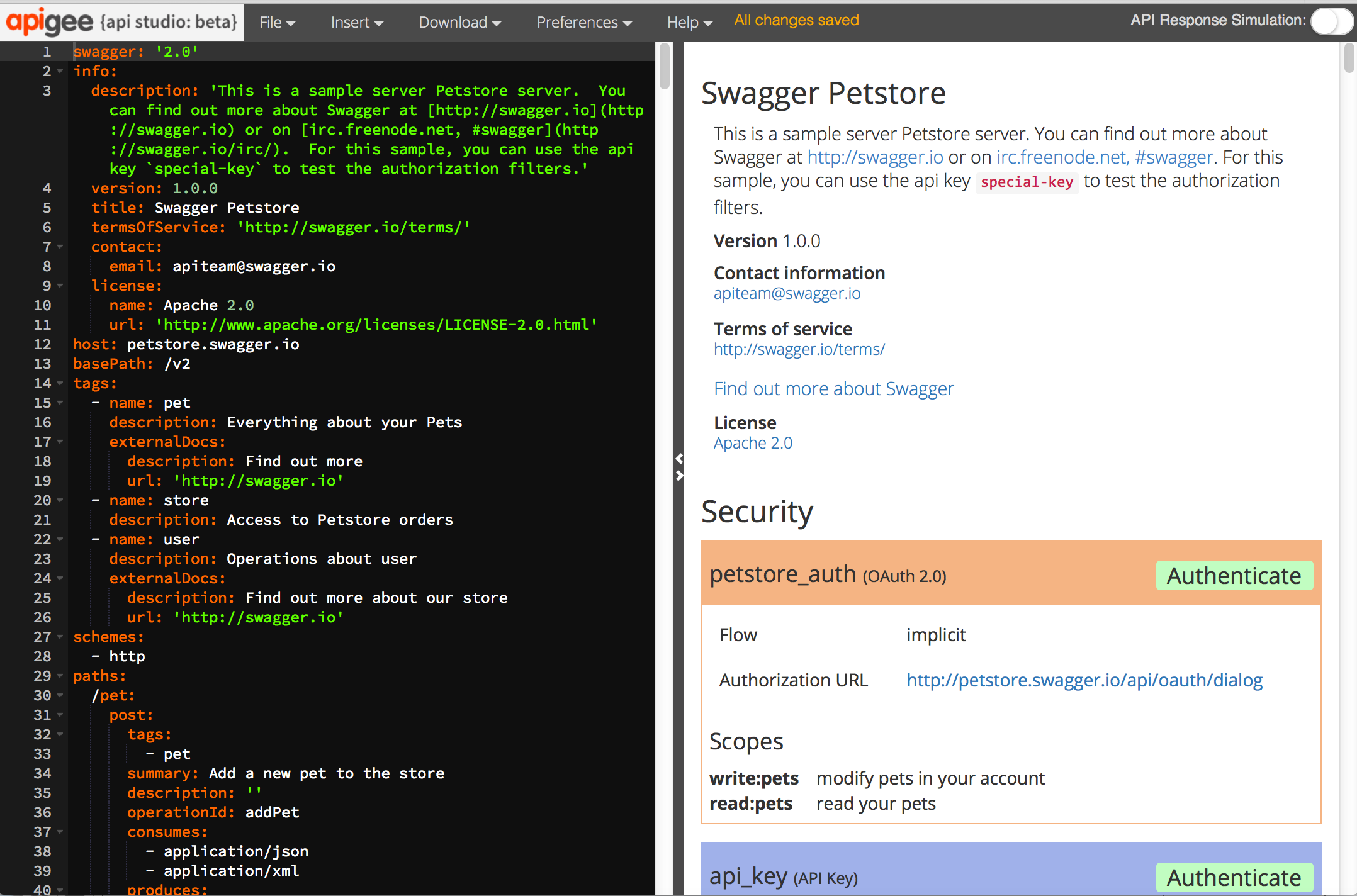

It’s pretty straightforward to deliver plain-old-XML over HTTP, which is quite RESTful. More popular is JSON-over-HTTP. Both have schema languages. Few people embrace them, though. Why? Because IDLs and schema languages are too much structure, and they handcuff people more than help them. We have fortunately learned from the past. There are more tools coming in this area, for those who wish to embrace them. See apistudio.io.

3. HATEOAS doesn’t work

Mmmmm, yep. No argument here. In my experience, nobody really uses this, in practice. Pragmatic REST is what people do, and it generally does not use HATEOAS.

4. no SQL semantics

Uh-huh, true. This has been addressed with things like OData. If you want SQL semantics, seek solutions, don’t just complain.
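To make that concrete, OData defines system query options such as `$filter`, `$orderby`, `$top` and `$skip` that cover filtering, sorting and paging over plain HTTP. The sketch below only builds the request URL; the `odata_url` helper, the `example.com` service root and the `Products` entity set are made up for illustration.

```python
from urllib.parse import quote, urlencode

def odata_url(service_root, entity_set, filter_expr=None, orderby=None,
              top=None, skip=None):
    """Build a query URL using OData system query options."""
    options = {}
    if filter_expr is not None:
        options["$filter"] = filter_expr   # roughly a WHERE clause
    if orderby is not None:
        options["$orderby"] = orderby      # roughly ORDER BY
    if top is not None:
        options["$top"] = top              # roughly LIMIT
    if skip is not None:
        options["$skip"] = skip            # roughly OFFSET
    # Keep "$" literal in option names and encode spaces as %20 rather than "+".
    query = urlencode(options, quote_via=quote, safe="$")
    url = f"{service_root}/{entity_set}"
    return f"{url}?{query}" if query else url

# Hypothetical service: third page of ten products over 20, most expensive first.
url = odata_url(
    "https://example.com/odata",
    "Products",
    filter_expr="Price gt 20",
    orderby="Price desc",
    top=10,
    skip=20,
)
print(url)
```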

5. CRUD is too limited

Really? This is a problem? That you might need a switch statement in your code to handle different types of events? Really?
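For the skeptics, here is roughly what that switch statement amounts to. This is a sketch under assumptions: the account resource, the event names and the status codes are all invented for illustration, with the idea being a single POST to something like `/accounts/{id}/events` whose JSON body carries a `type` field.

```python
def apply_deposit(account, event):
    account["balance"] += event["amount"]

def apply_withdrawal(account, event):
    if event["amount"] > account["balance"]:
        raise ValueError("insufficient funds")
    account["balance"] -= event["amount"]

def apply_freeze(account, event):
    account["frozen"] = True

# The "switch statement": one table that maps event types to handlers.
HANDLERS = {
    "deposit": apply_deposit,
    "withdrawal": apply_withdrawal,
    "freeze": apply_freeze,
}

def post_event(account, event):
    """Handle a POSTed event; returns (status, body)."""
    handler = HANDLERS.get(event.get("type"))
    if handler is None:
        return 400, {"error": "unknown event type"}
    try:
        handler(account, event)
    except ValueError as exc:
        return 409, {"error": str(exc)}
    return 201, account

account = {"balance": 100, "frozen": False}
print(post_event(account, {"type": "deposit", "amount": 25}))
print(post_event(account, {"type": "withdrawal", "amount": 500}))
```

The business verbs live in the payload, and the HTTP verb stays a plain POST.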

6. CRUD is really too limited

….

Mmmmm, sorry. I have to stop now. I’m completely bored of responding to this essay by now. Except for one more:

10. Backward compatibility is hard

This has NOTHING to do with REST. This is just true. Back compat in any interface is tricky.

In summary, I don’t find any of the arguments compelling.

Let me draw an analogy. The position in this essay is like saying “Oil is no good as a transportation fuel.” Now, Oil has its drawbacks! Oil is dirty. We can imagine alternatives that are better in theory. Even today, in specific local situations (daily use, short trips, urban travel) electric cars are better, MUCH better, than fossil-fuel based cars. (And bicycles are even better than electric cars.) But gasoline-powered cars deliver massive utility to billions of people. Gasoline refueling stations are everywhere. The delivery system for gasoline is mature and redundant. The World RUNS, very effectively, on gasoline-powered transport, by and large. Objectively, Oil is VERY GOOD as a transportation fuel.

Sure, we’ll evolve better approaches in the future. That’s great. And sure, we can imagine a world with electric-powered vehicles. But today, in the world of reality, Oil wins.

And likewise, Pragmatic REST, HTTP, JSON, and schema-less interfaces are winning. We’ll evolve better approaches. But today, this platform wins.

HTTP, HTML, JavaScript, and JSON are ubiquitous, are the foundation of the web, and are not going anywhere. Any architect is free to choose other options, and they might have good reasons for doing so. On the other hand, the vast majority of installations won’t benefit from using protobufs or Thrift, or some non-HTTP protocol. Pragmatic REST, JSON and HTTP are very, very safe choices in the vast majority of scenarios.

Cheers