Be sure to start with Part 1 of this series.

What’s Going on Here?

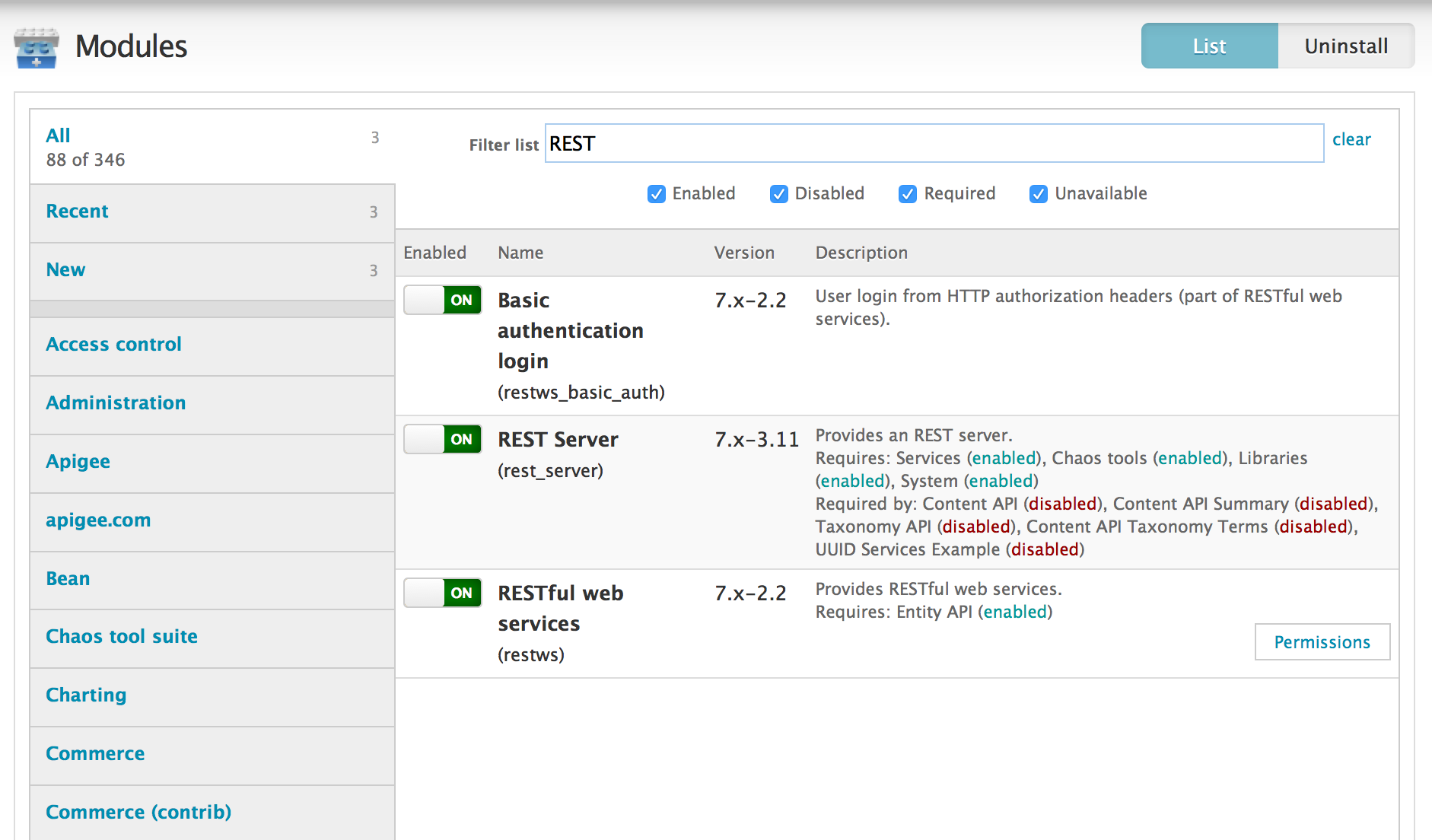

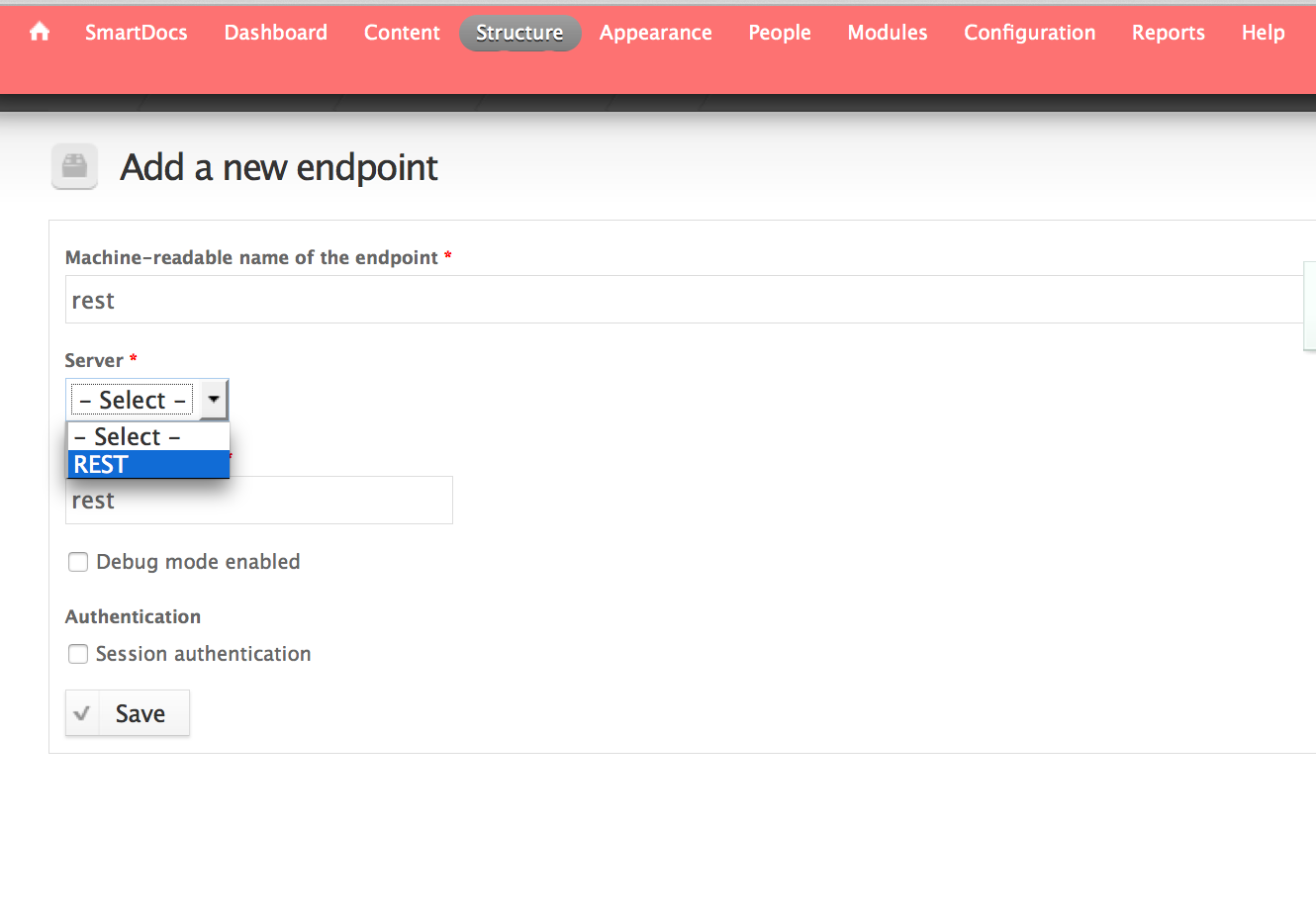

To recap: I’ve enabled the Services module in Drupal v7, in order to enable REST calls into Drupal, to do things like:

- list nodes

- create entities, like nodes, users, taxonomy vocabularies, or taxonomy terms

- delete or modify same

Clear? The prior post talks about the preparation. This post talks about some of the actual REST calls. Let’s start with Authentication.

Authentication

These are the steps required to make authenticated calls to Drupal via the Services module:

- Obtain a CSRF token

- Invoke the login API, passing the CSRF token.

- Get a Cookie and new token in response – the cookie is of the form {{Session-Name}}={{Session-id}}. Both the session name and id are returned in the json payload as well, along with a new CSRF token.

- Pass the cookie and the new token to all future requests

- Logout when finished, via POST /user/logout

The Actual Messages

OK, Let’s look at some example messages.

Get a CSRF Token

Request:

curl -i -X POST -H content-type:application/json \

-H Accept:application/json \

http://example.com/rest/user/token

The content-type header is required, even though there is no payload sent with the POST.

Response:

HTTP/1.1 200 OK

Cache-Control: no-cache, must-revalidate, post-check=0, pre-check=0

Content-Type: application/json

Etag: "1428629440"

Expires: Sun, 19 Nov 1978 05:00:00 GMT

Last-Modified: Fri, 10 Apr 2015 01:30:40 GMT

Vary: Accept

Content-Length: 55

Accept-Ranges: bytes

Date: Fri, 10 Apr 2015 01:30:51 GMT

Connection: keep-alive

{"token":"woalC7A1sRzpnzDhp8_rtWB1YlXBRalWMSODDX1yfUI"}

That’s a token, surely. I haven’t figured out what I need that token for. It’s worth pointing out that you get a new CSRF token when you login; see below. So I don’t do anything with this token. I never use the call to /rest/user/token .

Login

To do anything interesting, your app needs to login; aka authenticate. After login, your app can invoke regular transactions, using the information returned in that response. Let’s look at the messages.

Request:

curl -i -X POST -H content-type:application/json \

-H Accept:application/json \

http://example.com/rest/user/login \

-d '{

"username" : "YOURUSERNAME",

"password" : "YOURPASSWORD"

}'

Response:

HTTP/1.1 200 OK

Content-Type: application/json

Expires: Sun, 19 Nov 1978 05:00:00 GMT

Last-Modified: Fri, 10 Apr 2015 01:33:35 GMT

Set-Cookie: SESS02caabc123=ShBy6ue5TTabcdefg; expires=Sun, 03-May-2015 05:06:55 GMT; path=/; domain=.example.com; HttpOnly

...

{

"sessid": "ShBy6ue5TTabcdefg",

"session_name": "SESS02caabc123",

"token": "w98sdb9udjiskdjs",

"user": {

"uid": "4",

"name": "YOURUSERNAME",

"mail": "YOUREMAIL@example.com",

"theme": "",

"signature": "",

"signature_format": null,

"created": "1402005877",

"access": "1426280563",

"login": 1426280601,

"status": "1",

"timezone": null,

"language": "",

"picture": "0",

"data": false,

"uuid": "3e1e948e-940e-4a05-bd7a-267c6671c11b",

"roles": {

"2": "authenticated user",

"3": "administrator"

},

"field_first_name": {

"und": [{

"value": "Dino",

"format": null,

"safe_value": "Dino"

}]

},

"field_last_name": {

"und": [{

"value": "Chiesa",

"format": null,

"safe_value": "Chiesa"

}]

},

"metatags": [],

"rdf_mapping": {

"rdftype": ["sioc:UserAccount"],

"name": {

"predicates": ["foaf:name"]

},

"homepage": {

"predicates": ["foaf:page"],

"type": "rel"

}

}

}

}

There are a few data items that are of particular interest.

Briefly, in subsequent calls, your app needs to pass back the cookie specified in the Set-Cookie header. BUT, if you’re coding in Javascript or PHP or C# or Java or whatever, you don’t need to deal with managing cookies, because the cookie value is also contained in the JSON payload. The cookie has the form {SESSIONNAME}={SESSIONID}, and those values are provided right in the JSON. With the response shown above, subsequent GET calls need to specify a header like this:

Cookie: SESS02caabc123=ShBy6ue5TTabcdefg

Subsequent PUT, POST, and DELETE calls need to specify the Cookie as well as the CSRF header, like this:

Cookie: SESS02caabc123=ShBy6ue5TTabcdefg

X-CSRF-Token: w98sdb9udjiskdjs

In case it was not obvious: The value of the X-CSRF-Token is the value following the “token” property in the json response. Also: your values for the session name, session id, and token will be different than the ones shown here. Just sayin.

Get All Nodes

OK, the first thing to do once authenticated: get all the nodes. Here’s the request to do that:

Request:

curl -i -X GET \

-H Cookie:SESS02caabc123=ShBy6ue5TTabcdefg \

-H Accept:application/json \

http://example.com/rest/node

The response gives up to “pagesize” elements, which defaults to 20 on my system. You can also append a query parameter ?pagesize=30 for example to increase this. To repeat: you do not need to pass in the X-csrf-token header here for this query. The CSRF token is required for Update operations (POST, PUT, DELETE). Not for GET.

Here’s the response:

[{

"nid": "32",

"vid": "33",

"type": "wquota3",

"language": "und",

"title": "get weather for given WOEID (token)",

"uid": "4",

"status": "1",

"created": "1425419882",

"changed": "1425419904",

"comment": "1",

"promote": "0",

"sticky": "0",

"tnid": "0",

"translate": "0",

"uuid": "9b0b503d-cdd2-410f-9ba6-421804d25d4e",

"uri": "http://example.com/rest/node/32"

}, {

"nid": "33",

"vid": "34",

"type": "wquota3",

"language": "und",

"title": "get weather for given WOEID (key)",

"uid": "4",

"status": "1",

"created": "1425419882",

"changed": "1425419904",

"comment": "1",

"promote": "0",

"sticky": "0",

"tnid": "0",

"translate": "0",

"uuid": "56d233fe-91d4-49e5-aace-59f1c19fbb73",

"uri": "http://example.com/rest/node/33"

}, {

"nid": "31",

"vid": "32",

"type": "cbc",

"language": "und",

"title": "Shorten URL",

"uid": "4",

"status": "0",

"created": "1425419757",

"changed": "1425419757",

"comment": "1",

"promote": "0",

"sticky": "0",

"tnid": "0",

"translate": "0",

"uuid": "8f21a9bc-30e6-4232-adf9-fe705bad6049",

"uri": "http://example.com/rest/node/31"

}

...

]

This is an array, which some people say should never be returned by a REST resource. (Because What if you wanted to add a property to the response? Where would you put it?) But anyway, it works. You don’t get ALL the nodes, you get only a page worth. Also, you don’t get all the details for each node. But you do get the URL for each node, which is your way to get the full details of a node.

What if you want the next page? According to my reading of the scattered Drupal documentation, these are the query parameters accepted for queries on all entity types:

- (string) fields – A comma separated list of fields to get.

- (int) page – The zero-based index of the page to get, defaults to 0.

- (int) pagesize – Number of records to get per page.

- (string) sort – Field to sort by.

- (string) direction – Direction of the sort. ASC or DESC.

- (array) parameters – Filter parameters array such as parameters[title]=”test”

So, to get the next page, just send the same request, but with a query parameter, page=2.

Get One Node

This is easy.

Request:

curl -i -X GET \

-H Cookie:SESS02caabc123=ShBy6ue5TTabcdefg \

-H Accept:application/json \

http://example.com/rest/node/75

Response:

HTTP/1.1 200 OK

Content-Type: application/json

...

{

"vid": "76",

"uid": "4",

"title": "Embedding keys securely into the app",

"log": "",

"status": "1",

"comment": "2",

"promote": "0",

"sticky": "0",

"vuuid": "57f3aade-d923-4bb5-8861-1d2c160a9fd5",

"nid": "75",

"type": "forum",

"language": "und",

"created": "1427332570",

"changed": "1427332570",

"tnid": "0",

"translate": "0",

"uuid": "026c029d-5a45-4e10-8aec-ac5e9824a5c5",

"revision_timestamp": "1427332570",

"revision_uid": "4",

"taxonomy_forums": {

"und": [{

"tid": "89"

}]

},

"body": {

"und": [{

"value": "Suppose I have received my key from Healthsparq. Now I would like to embed that key into the app that I'm producing for the mobile device. How can I do this securely, so that undesirables will not be able to find the keys or sniff the key as I use it?",

"summary": "",

"format": "full_html",

"safe_value": "Suppose I have received my key from Healthsparq. Now I would like to embed that key into the app that I'm producing for the mobile device. How can I do this securely, so that undesirables will not be able to find the keys or sniff the key as I use it?

\n",

"safe_summary": ""

}]

},

"metatags": [],

"rdf_mapping": {

"rdftype": ["sioc:Post", "sioct:BoardPost"],

"taxonomy_forums": {

"predicates": ["sioc:has_container"],

"type": "rel"

},

"title": {

"predicates": ["dc:title"]

},

"created": {

"predicates": ["dc:date", "dc:created"],

"datatype": "xsd:dateTime",

"callback": "date_iso8601"

},

"changed": {

"predicates": ["dc:modified"],

"datatype": "xsd:dateTime",

"callback": "date_iso8601"

},

"body": {

"predicates": ["content:encoded"]

},

"uid": {

"predicates": ["sioc:has_creator"],

"type": "rel"

},

"name": {

"predicates": ["foaf:name"]

},

"comment_count": {

"predicates": ["sioc:num_replies"],

"datatype": "xsd:integer"

},

"last_activity": {

"predicates": ["sioc:last_activity_date"],

"datatype": "xsd:dateTime",

"callback": "date_iso8601"

}

},

"cid": "0",

"last_comment_timestamp": "1427332570",

"last_comment_name": null,

"last_comment_uid": "4",

"comment_count": "0",

"name": "DChiesa",

"picture": "0",

"data": null,

"forum_tid": "89",

"path": "http://example.com/content/embedding-keys-securely-app"

}

As you know, in Drupal a node can represent many things. In this case, this node is a forum post. You can see that from the “type”: “forum”, in the response.

Querying for a specific type of node

Request:

curl -i -X GET \

-H Cookie:SESS02caabc123=ShBy6ue5TTabcdefg \

-H Accept:application/json \

'http://example.com/rest/node?parameters\[type\]=forum'

Request:

curl -i -X GET \

-H Cookie:SESS02caabc123=ShBy6ue5TTabcdefg \

-H Accept:application/json \

'http://example.com/rest/node?parameters\[type\]=faq

Request:

curl -i -X GET \

-H Cookie:SESS02caabc123=ShBy6ue5TTabcdefg \

-H Accept:application/json \

'http://example.com/rest/node?parameters\[type\]=article

The response you get from each of these is the same as you would get from the non-parameterized query (for all nodes). The escaping of the square brackets is necessary only for using curl within bash. If you’re sending this request from an app, you don’t need to backslash-escape the square brackets.

Logout

Request:

curl -i -X POST \

-H content-type:application/json \

-H Accept:application/json \

-H Cookie:SESS02caabc123=ShBy6ue5TTabcdefg \

-H X-csrf-token:xxxx \

http://example.com/rest/user/logout -d '{}'

Notes: The value of the cookie header and the X-csrf-token header are obtained from the response to the login call! Also, obviously don’t call Logout until you’re finished making API calls. After the logout call, the Cookie and X-csrf-token will become invalid; discard them.

Response:

HTTP/1.1 200 OK

...

[true]

Pretty interesting as a response.

More examples, covering creating things and deleting things, in the next post in this series.