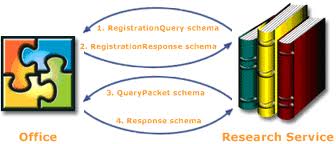

Last time, I covered the basics around Twitter’s use of OAuth. Now I will look at the protocol, or message flows. Remember, the basic need is to allow a user (resource owner) employing an application (what OAuth calls the client) to post a status message to Twitter (what OAuth calls the server).

There are two use cases: approve the app, and post a status message. Each use case has an associated message flow.

The first time a user tries to employ a client to post a status update to Twitter, the user must explicitly grant access to allow the client to act on the user’s behalf with Twitter. The first step is to request a temporary token, what Twitter calls a “request token”, via a POST to https://api.twitter.com/oauth/request_token like this:

POST https://api.twitter.com/oauth/request_token HTTP/1.1

Authorization: OAuth oauth_callback="oob",

oauth_consumer_key="XXXXXXXXXXXXXX",

oauth_nonce="0cv1i19r",

oauth_signature="mIggVeqh58bsJAMyLsTrgeNq0qo%3D",

oauth_signature_method="HMAC-SHA1",

oauth_timestamp="1336759491",

oauth_version="1.0"

Host: api.twitter.com

Connection: Keep-Alive

In the actual message, the Authorization header is all on one line, with each oauth_ parameter separated from the next by a comma and a space. It is shown above with broken lines only to allow easier reading. That header needs to be specially constructed. According to OAuth, the signature is an HMAC, using SHA1, over a normalized string representation of the relevant pieces of the request. For this request, that normalized string must include:

- the HTTP Method

- a normalized URL

- a URL-encoded form of the oauth_ parameters, sorted lexicographically and concatenated with ampersands. The “consumer secret” is never included here (think of that as a key or password), and neither is oauth_signature itself, since that is the value being computed.

Each of those elements is itself concatenated with ampersands. Think of it like this:

METHOD&URL&PARAMSTRING

Where the intended METHOD is POST or GET (etc), the URL is the URL of course (without its query string; any query parameters get folded into the PARAMSTRING alongside the oauth_ parameters), and the PARAMSTRING is a blob of stuff, which itself is the URL-encoded form of something like this:

oauth_callback=oob&oauth_consumer_key=xxxxxxxxxxxxxxx&

oauth_nonce=0cv1i19r&oauth_signature_method=HMAC-SHA1&

oauth_timestamp=1336759491&oauth_version=1.0

Once again, that is all one contiguous string – the newlines are there just for visual clarity. Note the lack of double-quotes here.

The final result, those three elements concatenated together (remember to url-encode the param string), is known as the signature base.

Now, about the values for those parameters:

- The callback value of ‘oob’ should be used for desktop or mobile applications. Web apps, let’s say a PHP-driven website that will post tweets on the user’s behalf, need to specify a web URL for the callback. This is a web endpoint that Twitter will redirect to, after the user confirms their authorization decision (either denial or approval).

- The timestamp is simply the number of seconds since January 1, 1970 (UTC). You can get this from PHP’s time() function, for example. In .NET it’s a little trickier.

- The nonce needs to be a unique string per timestamp, which means you could probably use any monotonically increasing number. Or even a random number. Some people use UUIDs but that’s probably overkill.

- The consumer key is something you get from the Twitter developer dashboard when you register your application.

- The other pieces (signature method, version), for Twitter anyway, are fixed.
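To make the list above concrete, here is a minimal sketch in Python of how a client might assemble those values. The helper names `oauth_timestamp` and `oauth_nonce` are my own, not part of any library, and the consumer key is a placeholder. I use a random hex string for the nonce, which is only one of the options mentioned above.

```python
import secrets
import time


def oauth_timestamp() -> str:
    """Seconds since 1970-01-01 UTC, as a decimal string."""
    return str(int(time.time()))


def oauth_nonce(num_bytes: int = 16) -> str:
    """Random hex string; a repeat within one timestamp is very unlikely."""
    return secrets.token_hex(num_bytes)


# Parameters for the request-token call (hypothetical consumer key).
params = {
    "oauth_callback": "oob",                  # desktop or mobile app
    "oauth_consumer_key": "XXXXXXXXXXXXXX",   # from the developer dashboard
    "oauth_nonce": oauth_nonce(),
    "oauth_signature_method": "HMAC-SHA1",    # fixed, for Twitter
    "oauth_timestamp": oauth_timestamp(),
    "oauth_version": "1.0",                   # fixed, for Twitter
}
```

A counter would work for the nonce too, but a random value means the client does not need to persist any state between runs.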

The parameter string must then be URL-encoded according to a special approach defined just for OAuth. OAuth specifies percent hex-encoding (%XX), and rather than defining which characters must be encoded, OAuth declares which characters need no encoding: alpha, digits, dash, dot, underscore, and twiddle (aka tilde). Everything else gets encoded.

The encoded parameter string is then concatenated with the other two elements, and the resulting “signature base” is something like this:
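In Python, the standard library’s `urllib.parse.quote` gets very close to this rule already. Here is a small sketch; the wrapper name `oauth_encode` is my own. The only trick is to override the default `safe` argument so that the slash gets encoded too.

```python
from urllib.parse import quote


def oauth_encode(value: str) -> str:
    """Percent-encode everything except letters, digits, '-', '.', '_', '~'."""
    # quote() never encodes letters, digits, '-', '.', or '_'.
    # Its default safe="/" would leave slashes alone, so we replace it;
    # "~" is listed explicitly because older Pythons encoded it.
    return quote(value, safe="~")


encoded_url = oauth_encode("https://api.twitter.com/oauth/request_token")
encoded_sig = oauth_encode("mIggVeqh58bsJAMyLsTrgeNq0qo=")
```

Note that a space comes out as %20 here, not as a plus sign, which is why `quote_plus` is not the right tool for this job.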

POST&https%3A%2F%2Fapi.twitter.com%2Foauth%2Frequest_token&

oauth_callback%3Doob%26oauth_consumer_key%3DXXXXXXXXXX%26

oauth_nonce%3D0cv1i19r%26oauth_signature_method%3DHMAC-SHA1%26

oauth_timestamp%3D1336759491%26oauth_version%3D1.0
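The construction of that string can be sketched in a few lines of Python. The function names are my own, and this sketch assumes the URL carries no query string and that no parameter name repeats. It uses the values from the example above, with a placeholder consumer key.

```python
from typing import Mapping
from urllib.parse import quote


def oauth_encode(value: str) -> str:
    """Percent-encode all but letters, digits, '-', '.', '_', '~'."""
    return quote(value, safe="~")


def signature_base(method: str, url: str, params: Mapping[str, str]) -> str:
    """Build METHOD&URL&PARAMSTRING, with the last two pieces URL-encoded."""
    # Encode each name and value, then sort the pairs lexicographically.
    pairs = sorted((oauth_encode(k), oauth_encode(v)) for k, v in params.items())
    param_string = "&".join(f"{k}={v}" for k, v in pairs)
    # The param string is encoded a second time as a whole.
    return "&".join([method.upper(), oauth_encode(url), oauth_encode(param_string)])


params = {
    "oauth_version": "1.0",
    "oauth_timestamp": "1336759491",
    "oauth_signature_method": "HMAC-SHA1",
    "oauth_nonce": "0cv1i19r",
    "oauth_consumer_key": "XXXXXXXXXX",  # placeholder
    "oauth_callback": "oob",
}
base = signature_base("POST", "https://api.twitter.com/oauth/request_token", params)
```

The dictionary is deliberately written in the wrong order, to show that the sort inside `signature_base` is what fixes the parameter order.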

The client app must then compute the HMAC-SHA1 of the signature base, using a key formed by concatenating the consumer secret (aka client secret) and the token secret, separated by an ampersand. In this first message, there is no token secret yet, therefore the key is simply the consumer secret with an ampersand appended. Get the key bytes by UTF-8 encoding that result. Sign the UTF-8 encoding of the signature base with that key, then base64-encode the resulting byte array to get the string value of oauth_signature. Whew! The client needs to embed this signature, URL-encoded like the other values, into the Authorization header of the HTTP request, as shown above.
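Here is a rough Python sketch of the signing step and the header assembly, using only the standard library. The function names `sign` and `authorization_header` are mine, and the secret is a made-up placeholder. One detail I am adding beyond the description above: the OAuth 1.0 spec percent-encodes each secret before joining them, which makes no difference for purely alphanumeric secrets.

```python
import base64
import hashlib
import hmac
from typing import Mapping
from urllib.parse import quote


def oauth_encode(value: str) -> str:
    return quote(value, safe="~")


def sign(base_string: str, consumer_secret: str, token_secret: str = "") -> str:
    """HMAC-SHA1 over the signature base, base64-encoded."""
    # With no token secret yet, the key is the consumer secret plus "&".
    key = f"{oauth_encode(consumer_secret)}&{oauth_encode(token_secret)}"
    digest = hmac.new(key.encode("utf-8"), base_string.encode("utf-8"),
                      hashlib.sha1).digest()
    return base64.b64encode(digest).decode("ascii")


def authorization_header(params: Mapping[str, str], signature: str) -> str:
    """Render the oauth_ parameters as one comma-space separated header value."""
    all_params = dict(params, oauth_signature=signature)
    parts = [f'{oauth_encode(k)}="{oauth_encode(v)}"'
             for k, v in sorted(all_params.items())]
    return "OAuth " + ", ".join(parts)


# Hypothetical usage: "base" stands in for a real signature base string.
base = "POST&https%3A%2F%2Fapi.twitter.com%2Foauth%2Frequest_token&oauth_callback%3Doob"
signature = sign(base, consumer_secret="NOT-A-REAL-SECRET")
header = authorization_header({"oauth_callback": "oob", "oauth_version": "1.0"},
                              signature)
```

The header value then goes out as `Authorization: <header>` on the request. Sorting the parameters in the header is not required; I do it only so the output matches the layout of the example message.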

If the signature and timestamp check out, the server responds with a temporary request token and secret, like this:

HTTP/1.1 200 OK

Date: Fri, 11 May 2012 18:04:46 GMT

Status: 200 OK

Pragma: no-cache

...

Last-Modified: Fri, 11 May 2012 18:04:46 GMT

Content-Length: 147

oauth_token=vXwB2yCZYFAPlr4RcUcjzfIVW6F0b8lPVsAbMe7x8e4

&oauth_token_secret=T81nWXuM5tUHKm8Kz7Pin8x8k70i2aThfWVuWcyXi0

&oauth_callback_confirmed=true

Here again, the message is shown on multiple lines, but in the actual response the payload will be all on one line. This response contains what OAuth calls a temporary token, and what Twitter has called a request token. With this the client must direct the user to grant authorization. For Twitter, the user does that by opening a web page pointing to https://api.twitter.com/oauth/authorize?oauth_token=xxxx, inserting the proper value of oauth_token.
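Since the payload is just form-encoded name/value pairs, picking it apart is easy. Here is a minimal Python sketch that parses the response shown above and builds the authorization URL; `parse_token_response` is a name I made up for illustration.

```python
from urllib.parse import parse_qs, urlencode

# The response payload from above, as the single line it really is.
body = ("oauth_token=vXwB2yCZYFAPlr4RcUcjzfIVW6F0b8lPVsAbMe7x8e4"
        "&oauth_token_secret=T81nWXuM5tUHKm8Kz7Pin8x8k70i2aThfWVuWcyXi0"
        "&oauth_callback_confirmed=true")


def parse_token_response(payload: str) -> dict:
    """Turn a form-encoded payload into a flat name -> value dict."""
    # parse_qs returns a list per name; each name appears once here.
    return {k: v[0] for k, v in parse_qs(payload, strict_parsing=True).items()}


fields = parse_token_response(body)
authorize_url = ("https://api.twitter.com/oauth/authorize?"
                 + urlencode({"oauth_token": fields["oauth_token"]}))

# A desktop client could now hand the URL to the default browser:
#   import webbrowser; webbrowser.open(authorize_url)
```

The client needs to hold on to `fields["oauth_token_secret"]` as well, because it becomes part of the signing key for the next request.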

Opening that URL brings up Twitter’s authorization page, which asks the user to approve or deny the app’s request.

When the user clicks the “approve” button, the web page submits the form to https://api.twitter.com/oauth/authorize, and Twitter responds with a web page, formatted in HTML, containing an approval PIN. The normal flow is for the web browser to display that page. The user can then copy that PIN from the browser, and paste it into the client application, to allow the client to complete the message exchange with Twitter required for approval. If the client itself is controlling the web browser, as with a Windows Forms WebBrowser control, then the client app can extract the PIN programmatically without requiring any explicit cut-n-paste step by the user.

The client then sends another message to Twitter to complete the authorization. Here again, the Authorization header is formatted specially, as described above. In this case the header must include oauth_token and oauth_verifier set to the token and the PIN received in the previous exchange, but it need not include the oauth_callback. Once again, the client must prepare the signature base and sign it, and then embed the resulting signature into the Authorization header. It looks like this:

POST https://api.twitter.com/oauth/access_token HTTP/1.1

Authorization: OAuth

oauth_consumer_key="xxxxxxxxx",

oauth_nonce="1ovs611q",

oauth_signature="24iNJYm4mD4FIyZCM8amT8GPkrg%3D",

oauth_signature_method="HMAC-SHA1",

oauth_timestamp="1336764022",

oauth_token="RtYhup118f01CWCMgbyPvwoOcCHk0fXPeXqlPVRZzM",

oauth_verifier="0167809",

oauth_version="1.0"

Host: api.twitter.com

(In an actual message, that Authorization header will be all on one line.) The server responds with a regular HTTP response, with a message body (payload) like this:

oauth_token=59152613-PNjdWlAPngxOVa4dCWnLMIzZcUXKmwMoQpQWTkbvD&

oauth_token_secret=UV1oFX1wb7lehcH6p6bWbm3N1RcxNUVs3OEGVts4&

user_id=59152613&

screen_name=dpchiesa

(All on one line). The oauth_token and oauth_token_secret shown in this response look very similar to the data with the same names received in the prior response. But these are access tokens, not temporary tokens. The client application now has a token and token secret that it can use for OAuth-protected transactions.
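As a sketch of what the client might do with that final response, here is some Python that parses it into a small record and derives the signing key for later requests, following the consumer-secret-ampersand-token-secret rule described earlier. The `AccessCredentials` class and the consumer secret are hypothetical, purely for illustration.

```python
from dataclasses import dataclass
from urllib.parse import parse_qs, quote


@dataclass(frozen=True)
class AccessCredentials:
    token: str
    token_secret: str
    user_id: str
    screen_name: str

    def signing_key(self, consumer_secret: str) -> bytes:
        """Key for later requests: consumer secret, '&', token secret."""
        key = f"{quote(consumer_secret, safe='~')}&{quote(self.token_secret, safe='~')}"
        return key.encode("utf-8")


def parse_access_token(payload: str) -> AccessCredentials:
    fields = {k: v[0] for k, v in parse_qs(payload, strict_parsing=True).items()}
    return AccessCredentials(
        token=fields["oauth_token"],
        token_secret=fields["oauth_token_secret"],
        user_id=fields["user_id"],
        screen_name=fields["screen_name"],
    )


# The payload from above, as one line.
creds = parse_access_token(
    "oauth_token=59152613-PNjdWlAPngxOVa4dCWnLMIzZcUXKmwMoQpQWTkbvD"
    "&oauth_token_secret=UV1oFX1wb7lehcH6p6bWbm3N1RcxNUVs3OEGVts4"
    "&user_id=59152613&screen_name=dpchiesa"
)
key = creds.signing_key("NOT-A-REAL-SECRET")  # placeholder consumer secret
```

A real client would store the token and token secret somewhere safe at this point, so that the user does not have to go through the approval step again.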

All this is necessary only the first time a user employs an application, before it has been authorized.

In the next post I’ll describe the message flows that use this token and secret to post a status update to Twitter.